The Most Powerful Compute Platform for Every Workload

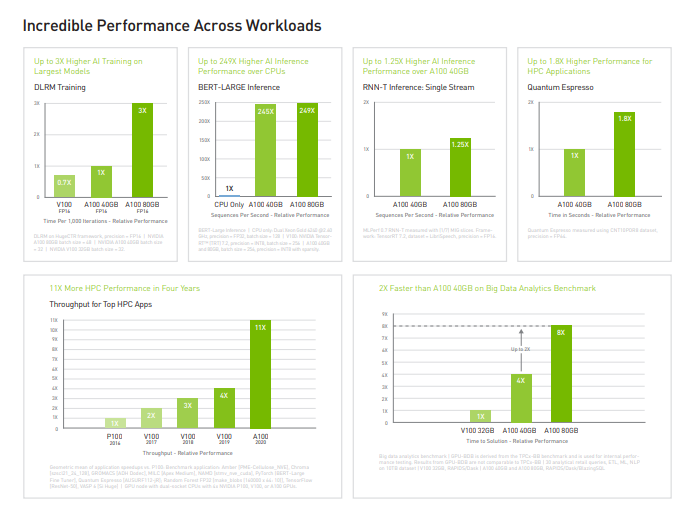

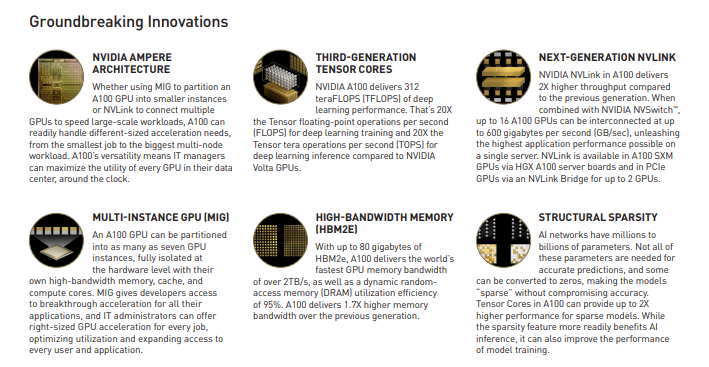

The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration at every scale to power the world’s highest-performing elastic data centers for AI, data analytics, and high-performance computing (HPC) applications. As the engine of the NVIDIA data center platform, A100 provides up to 20X higher performance than the prior NVIDIA Volta™ generation. A100 can efficiently scale up or be partitioned into up to seven isolated GPU instances with Multi-Instance GPU (MIG), providing a unified platform that lets elastic data centers adjust dynamically to shifting workload demands.

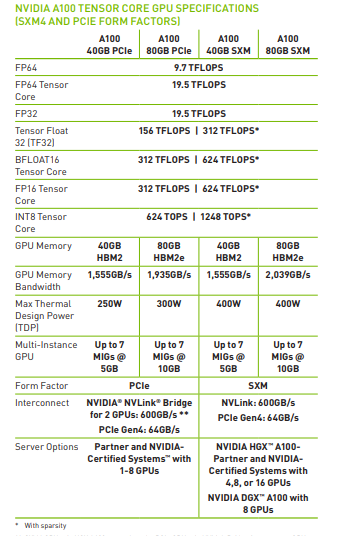

NVIDIA A100 Tensor Core technology supports a broad range of math precisions, providing a single accelerator for every workload. The latest-generation A100 80GB doubles GPU memory and debuts the world’s fastest memory bandwidth at 2 terabytes per second (TB/s), speeding time to solution for the largest models and most massive datasets. A100 is part of the complete NVIDIA data center solution that incorporates building blocks across hardware, networking, software, libraries, and optimized AI models and applications from the NVIDIA NGC™ catalog. Representing the most powerful end-to-end AI and HPC platform for data centers, it allows researchers to deliver real-world results and deploy solutions into production at scale.
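As a rough illustration of what the 2 TB/s figure implies, the sketch below estimates the minimum time needed to read the full 80GB of GPU memory once. It assumes decimal units (1 GB = 10^9 bytes, 1 TB = 10^12 bytes) and treats the quoted bandwidth as a sustained rate, which real workloads generally will not reach. The function and variable names are illustrative and are not part of any NVIDIA API.

```python
# Back-of-the-envelope estimate only.
# Assumptions: decimal units and the quoted peak bandwidth as a sustained rate.
GB = 10**9
TB = 10**12


def min_sweep_time_seconds(memory_bytes: int, bandwidth_bytes_per_s: int) -> float:
    """Lower bound on the time to read every byte of memory exactly once."""
    return memory_bytes / bandwidth_bytes_per_s


# A100 80GB at 2 TB/s, both figures taken from the text above.
a100_80gb_sweep = min_sweep_time_seconds(80 * GB, 2 * TB)
print(f"{a100_80gb_sweep * 1000:.0f} ms")  # prints "40 ms"
```

The result is a lower bound: any access pattern that cannot keep the memory system saturated will take longer.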

The NVIDIA A100 Tensor Core GPU is the flagship product of the NVIDIA data center platform for deep learning, HPC, and data analytics. The platform accelerates over 2,000 applications, including every major deep learning framework. A100 is available everywhere, from desktops to servers to cloud services, delivering both dramatic performance gains and cost-saving opportunities.

OPTIMIZED SOFTWARE AND SERVICES FOR ENTERPRISE

NVIDIA HGX SPECIFICATIONS

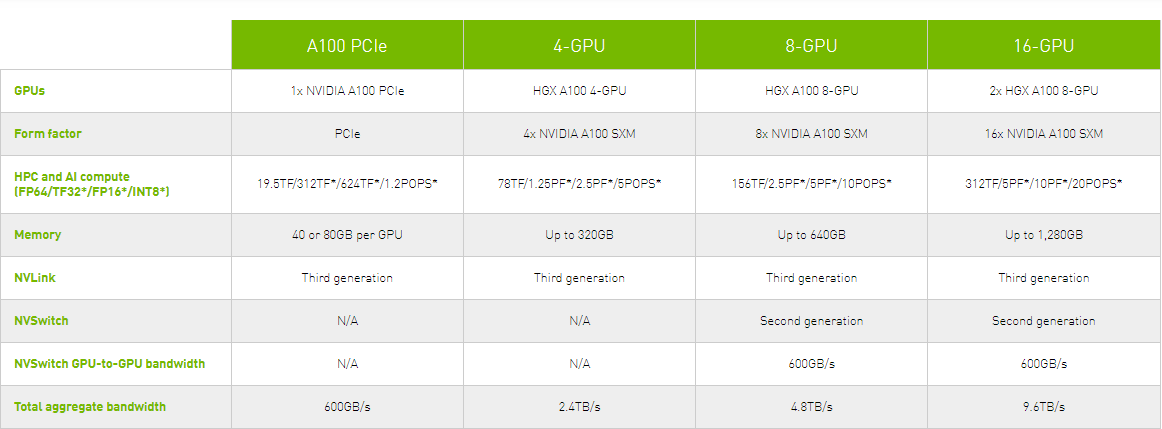

NVIDIA HGX is available in single baseboards with four or eight A100 GPUs, each with 40GB or 80GB of GPU memory. The 4-GPU configuration is fully interconnected with NVIDIA NVLink®, and the 8-GPU configuration is interconnected with NVSwitch. Two NVIDIA HGX A100 8-GPU baseboards can be combined using an NVSwitch interconnect to create a powerful 16-GPU single node.
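To make the configuration options concrete, the following sketch tabulates the aggregate GPU memory for each combination of GPU count and per-GPU memory mentioned above. It is simple multiplication under the assumption that every GPU in a node has the same memory size; it does not account for memory reserved by drivers or the runtime, and the names used are illustrative.

```python
# Aggregate GPU memory for the HGX A100 configurations described above.
# Assumption: all GPUs in a node have the same memory size.
from itertools import product

GPU_COUNTS = (4, 8, 16)        # 16 = two 8-GPU baseboards joined via NVSwitch
MEMORY_PER_GPU_GB = (40, 80)   # per-GPU memory options from the text


def total_memory_gb(gpu_count: int, memory_per_gpu_gb: int) -> int:
    """Total GPU memory of a node, in GB."""
    return gpu_count * memory_per_gpu_gb


hgx_totals = {
    (count, mem): total_memory_gb(count, mem)
    for count, mem in product(GPU_COUNTS, MEMORY_PER_GPU_GB)
}

for (count, mem), total in hgx_totals.items():
    print(f"{count} x A100 {mem}GB -> {total} GB total GPU memory")
```

For example, an 8-GPU baseboard with 80GB GPUs totals 640 GB, and a 16-GPU node built from two such baseboards totals 1,280 GB.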

HGX is also available in a PCIe form factor for a modular, easy-to-deploy option that brings the highest computing performance to mainstream servers, with either 40GB or 80GB of memory per GPU.

This powerful combination of hardware and software lays the foundation for the ultimate AI supercomputing platform.

Accelerating HGX with NVIDIA Networking

With HGX, it’s also possible to include NVIDIA networking to accelerate and offload data transfers and ensure the full utilization of computing resources. Smart adapters and switches reduce latency, increase efficiency, enhance security, and simplify data center automation to accelerate end-to-end application performance.

The data center is the new unit of computing, and HPC networking plays an integral role in scaling application performance across the entire data center. NVIDIA InfiniBand is paving the way with software-defined networking, In-Network Computing acceleration, remote direct memory access (RDMA), and the fastest speeds and feeds.