Over the last 15 years, High Performance Computing (HPC) has consistently been one of the fastest-growing IT markets, with growth exceeding the year-over-year growth of online games and tablets.

HPC primarily uses many processors, or many computers within a cluster, to execute a parallel algorithm. By dividing a task with a large dataset into subsets according to certain rules, scientists can compute each subset on a different processor or node within the cluster and then compile the results, significantly reducing computation time.
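The divide, compute and compile pattern described above can be sketched in Python on a single machine. This is a minimal illustration under stated assumptions, not how any particular HPC system implements it: worker processes stand in for cluster nodes, simple block partitioning stands in for the "certain rules", and the function names (`split`, `partial_sum_of_squares`, `parallel_sum_of_squares`) are hypothetical.

```python
from concurrent.futures import ProcessPoolExecutor


def split(data, n_parts):
    """Divide data into n_parts contiguous subsets (simple block partitioning)."""
    size, rem = divmod(len(data), n_parts)
    chunks, start = [], 0
    for i in range(n_parts):
        # The first `rem` chunks take one extra element, so sizes differ by at most 1.
        end = start + size + (1 if i < rem else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def partial_sum_of_squares(chunk):
    """Work done independently on one subset; no data exchange is needed."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4):
    chunks = split(data, workers)
    # Each chunk is processed in a separate worker process.
    with ProcessPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    # Compile the partial results into the final answer.
    return sum(partials)


if __name__ == "__main__":
    data = list(range(100_000))
    assert parallel_sum_of_squares(data) == sum(x * x for x in data)
    print(parallel_sum_of_squares(data))
```

On a real cluster, the same split, compute and combine structure would typically be expressed with a message-passing library such as MPI (a scatter followed by a reduce) rather than a local process pool.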

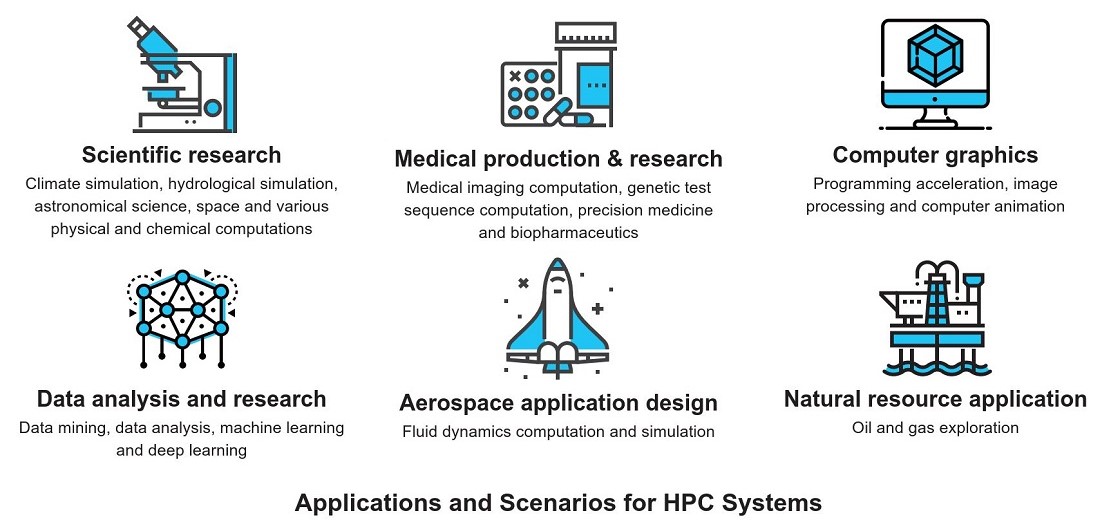

High Performance Computing (HPC)

The applications of HPC systems are generally large-scale computations beyond the reach of ordinary computers, including medical imaging, genetic analysis and comparison, computer animation, energy exploration, climate simulation, astronomy, space, and various physical and chemical computations. The nodes of an HPC system work in parallel: while processing tasks, they compute and also exchange data with each other. The performance of an HPC system grows with the scale of its cluster; in principle, its aggregate capacity is the sum of the processing capacities of the nodes within the cluster.

There are many ways to classify HPC systems, but they typically fall into one of three types.

Symmetric Multi-Processing (SMP)

A system in which all CPUs are identical and share the same main memory is called a shared-memory multiprocessor system. It suits applications that require only a few processors but still involve large-scale data computation and analysis. Its advantages include a simple system architecture, easy management, and the relative ease of writing parallel programs.
Its disadvantages are poor scalability and a high expansion cost, with high-end models resembling legacy mainframe systems. With advances in complex instruction set computing (CISC) processors, GIGABYTE's new R292 series servers support 4 processors in a single node, effectively mitigating the scalability limitation while giving customers high flexibility.

Massively Parallel Processing (MPP)

This system consists of many computing nodes, each with its own local memory and a microkernel operating system. Because the system usually has no global memory and is centrally managed by a main operating system working together with the microkernels of all nodes, it is also called Distributed Memory Parallel (DMP). Its advantage is high scalability; its disadvantages are that user memory is distributed across different nodes and that parallel programs are harder to write. To cope with computation failures on individual nodes, this architecture evolved to provide high availability for node computation tasks, which is how the High Performance Computing (HPC) cluster was born.
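The practical difference between SMP and MPP/DMP is where the data lives. The Python sketch below is only an analogy on one machine, and its function names are hypothetical: threads stand in for SMP processors that share one address space (and therefore need a lock), while separate processes stand in for DMP nodes that each hold only their own slice of the data and return results as messages. On a real MPP system the messages would typically travel over the interconnect via a library such as MPI; the `multiprocessing.Queue` here is an assumption made for illustration.

```python
import multiprocessing as mp
import threading


def smp_style_count(data, n_threads=4):
    """SMP-style: threads share one address space and one result variable."""
    total = [0]              # shared result, visible to every thread
    lock = threading.Lock()  # shared memory requires explicit synchronization

    def worker(start, stop):
        local = sum(1 for x in data[start:stop] if x % 3 == 0)
        with lock:
            total[0] += local

    step = max(1, -(-len(data) // n_threads))  # ceiling division, at least 1
    threads = [threading.Thread(target=worker, args=(i, i + step))
               for i in range(0, len(data), step)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total[0]


def dmp_node(local_data, outbox):
    """DMP-style node: sees only its own slice and reports a result as a message."""
    outbox.put(sum(1 for x in local_data if x % 3 == 0))


def dmp_style_count(data, n_nodes=4):
    step = max(1, -(-len(data) // n_nodes))
    outbox = mp.Queue()
    # Each process receives a private copy of its slice; nothing is shared.
    procs = [mp.Process(target=dmp_node, args=(data[i:i + step], outbox))
             for i in range(0, len(data), step)]
    for p in procs:
        p.start()
    results = [outbox.get() for _ in procs]  # collect messages before joining
    for p in procs:
        p.join()
    return sum(results)


if __name__ == "__main__":
    data = list(range(100_000))
    assert smp_style_count(data) == dmp_style_count(data)
    print(smp_style_count(data))
```

Both functions compute the same count; what changes is that the SMP version coordinates through shared state, while the DMP version coordinates only through explicit messages, which is why DMP programs are harder to write but scale across machines.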

An HPC cluster usually connects nodes with the same hardware and operating system over a network to form a parallel computing system. Its primary advantages are extremely high flexibility and cost-effective expansion of computing capacity through standardized host technology. Its main disadvantage is that well-designed software, in particular well-parallelized code, is needed for optimal performance.

Heterogeneous Computing

At the current stage, the preferred approach is to combine CPUs with GPU, FPGA or ASIC acceleration cards for heterogeneous computing. In recent years, the conventional approach of raising CPU clock frequency and core count has run into a heat-dissipation bottleneck. Meanwhile, GPU, FPGA and ASIC cards have evolved toward higher core counts and greater parallel computing power. Because such a system delivers high overall performance per chip area and performance per watt, computation for science, business analytics and engineering applications can be accelerated to a large extent. By combining GIGABYTE servers, whose CPUs are optimized for sequential processing, with different types of acceleration cards that contribute thousands of small computing cores, heterogeneous computing has become one of the mainstream high performance computing architectures today.
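To make the capacity-expansion and performance-per-watt arguments concrete, here is a small Python sketch. It assumes, as the text does, that aggregate peak capacity is the sum of node capacities; real sustained performance is lower because of communication and parallelization overhead. The `Device` type, the helper functions and every number in the example are hypothetical placeholders, not specifications of any GIGABYTE product or accelerator.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    peak_gflops: float  # theoretical peak throughput of one device (hypothetical)
    watts: float        # rated power of one device (hypothetical)
    count: int = 1      # how many of this device are installed


def peak_gflops(devices):
    """Aggregate peak: the sum of device capacities (ignores communication overhead)."""
    return sum(d.peak_gflops * d.count for d in devices)


def gflops_per_watt(devices):
    """Performance-per-watt ratio of a node or cluster."""
    return peak_gflops(devices) / sum(d.watts * d.count for d in devices)


def scale_out(node, n_nodes):
    """A cluster of n identical nodes: every device count is multiplied by n."""
    return [Device(d.name, d.peak_gflops, d.watts, d.count * n_nodes) for d in node]


if __name__ == "__main__":
    # All figures below are hypothetical placeholders, not specs of any product.
    cpu_node = [Device("cpu", 1000.0, 200.0, count=2)]
    hetero_node = cpu_node + [Device("accelerator", 7000.0, 300.0, count=4)]
    for label, node in [("CPU-only", cpu_node), ("heterogeneous", hetero_node)]:
        cluster = scale_out(node, 16)
        print(f"{label}: {peak_gflops(cluster):.0f} GFLOPS peak, "
              f"{gflops_per_watt(cluster):.2f} GFLOPS/W")
```

Because `scale_out` multiplies every device count by the same factor, peak capacity grows linearly as identical nodes are added while performance per watt stays constant; any advantage of the heterogeneous node comes entirely from the assumed accelerator figures.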

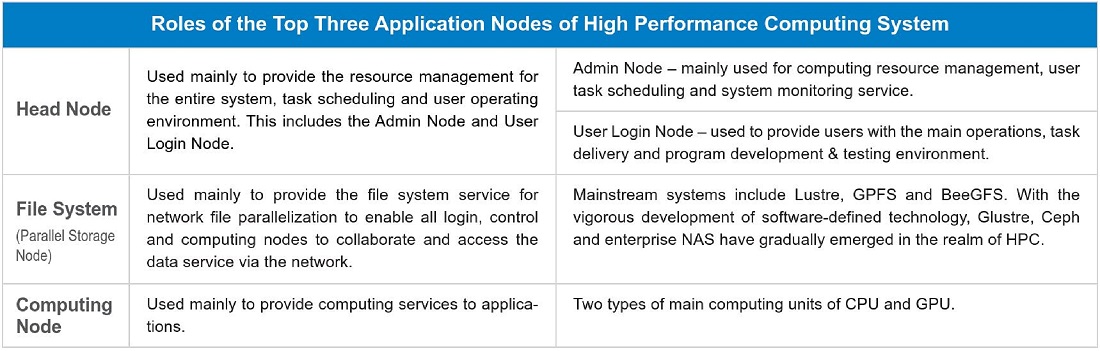

High Performance Computing System

Whether it is an MPP system or a heterogeneous-computing system, the nodes of a high performance computing system fall into three application types.

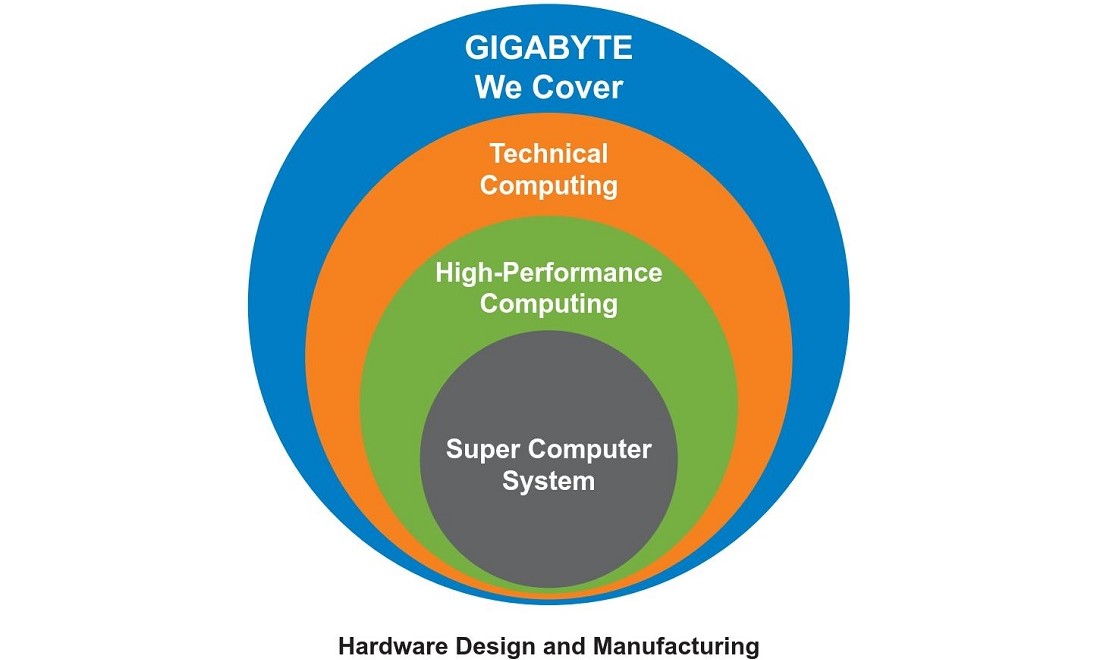

Based on our long-term tracking of technical developments and market trends, we at GIGABYTE began planning and deploying high performance computing products at an early stage, launching a wide array of products for different architectures and cluster nodes to give customers more flexible options, including:

● R292 Series servers: support 4 processors for symmetric multi-processor architecture;

● H Series servers: are high-density server products for the mainstream dual-processor architecture that can be used as the cluster unit for high-density MPPs;

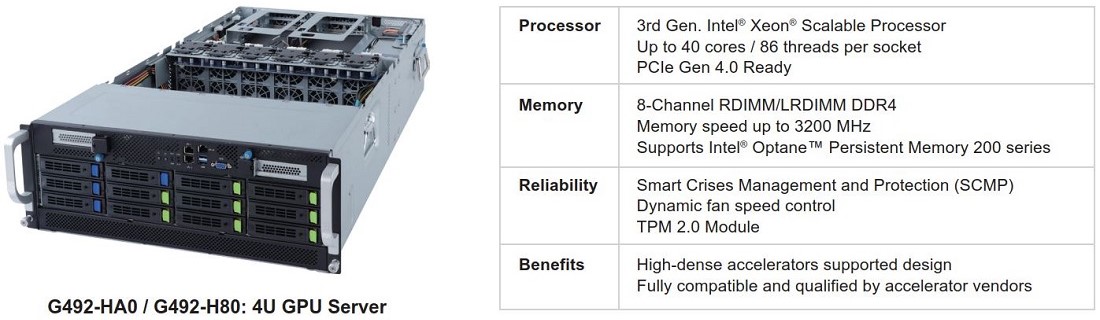

● G Series servers: offer higher acceleration card density and broader compatibility than competing products, with support for well-known NVIDIA and AMD GPUs as well as Xilinx and Intel FPGA and QUALCOMM ASIC acceleration cards; and

● S Series servers: include 16-, 36- and 60-bay enterprise-class high-capacity HDD storage models as well as the S260, an all-flash storage array system for the latest applications; they support a variety of software-defined storage solutions and meet the hardware requirements of different data pools.

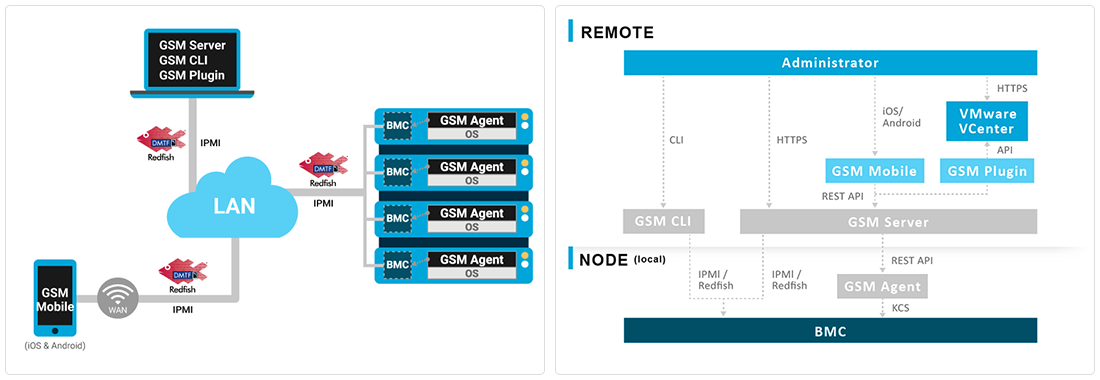

GIGABYTE's complete server product line covers every aspect of building a high performance computing system, allowing customers to acquire one-stop solutions. Our server products also support software suites from different sources, run on mainstream operating systems, and are compatible with a full spectrum of software development environments. When combined with our GIGABYTE Server Management (GSM) software suite, they also offer capabilities such as advanced self-monitoring and self-diagnosis. High performance computing systems built with our servers have the following features:

● Fast installation and update delivery of system software;

● Automatic management of system-wide computing resources and task scheduling;

● Real-time monitoring of system performance with automatic event alerts;

● Highly autonomous development environment for users' parallel programs and for system management;

● High performance parallel file system service;

● On-demand, customizable and integrated system management service.
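Automatic task scheduling can take many forms, and this article does not describe the algorithm GSM uses. As a hypothetical illustration only, the classic greedy heuristic of placing the longest job first onto the least-loaded node (LPT list scheduling) fits in a few lines of Python; the job names, runtimes and node names are made up.

```python
import heapq


def schedule(jobs, nodes):
    """Greedy LPT list scheduling.

    jobs:  dict mapping job name -> estimated runtime (hypothetical units)
    nodes: non-empty list of node names
    Returns (assignment dict node -> [jobs], makespan).
    """
    heap = [(0.0, node) for node in nodes]  # (current load, node name)
    heapq.heapify(heap)
    assignment = {node: [] for node in nodes}
    # Longest jobs first; ties broken by job name for deterministic output.
    for job, runtime in sorted(jobs.items(), key=lambda kv: (-kv[1], kv[0])):
        load, node = heapq.heappop(heap)  # currently least-loaded node
        assignment[node].append(job)
        heapq.heappush(heap, (load + runtime, node))
    makespan = max(load for load, _ in heap)  # finish time of the busiest node
    return assignment, makespan


if __name__ == "__main__":
    jobs = {"render": 5, "align": 4, "simulate": 3, "mesh": 3, "report": 2}
    assignment, makespan = schedule(jobs, ["node1", "node2"])
    print(assignment, makespan)
```

Placing long jobs first keeps any single late-arriving long job from unbalancing the nodes; production schedulers add priorities, queues and resource constraints on top of ideas like this.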

GIGABYTE – Hardware Manager

Building a High Performance System with GIGABYTE Servers

Data centers need to choose the right node builds or expansion systems based on their existing environment or application software, which means selecting the server products best suited to their own needs.

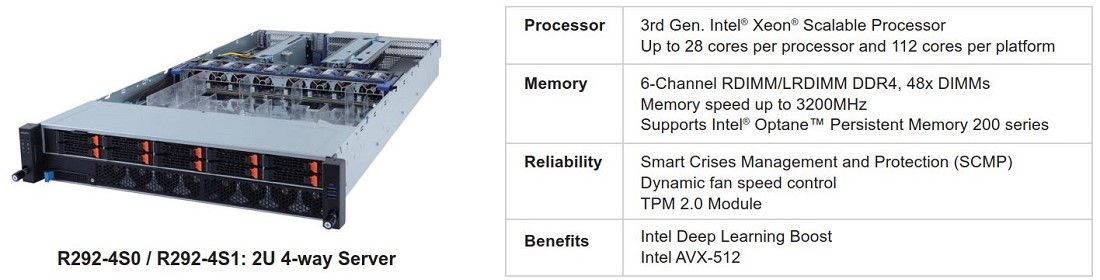

● R292 Series Servers for Symmetric Multi-Processing Architecture

The R292 series servers support four 3rd gen Intel Xeon Scalable processors, with each processor exchanging data and sharing workloads with the other three processors on the motherboard at 20.8 GT/s. This groundbreaking computing power can handle large-scale mission-critical applications and analyze ever-growing data at extraordinary speed.

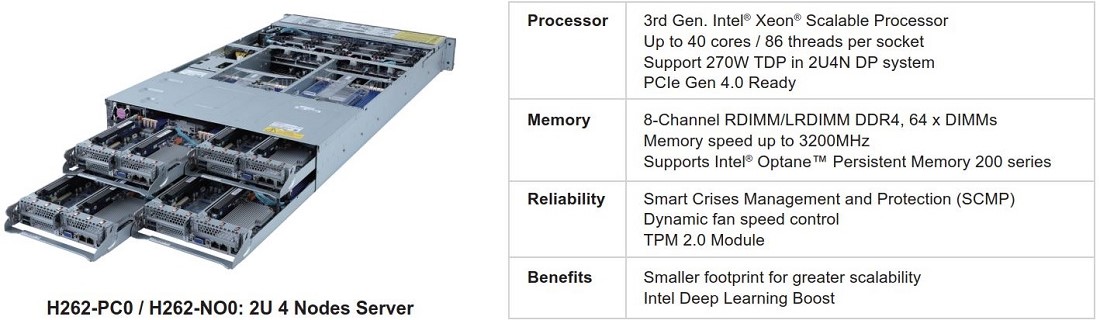

● H Series High Density Servers for MPP

Optimized for HPC and Hyper-Converged Infrastructure (HCI), the H series servers accommodate 4 nodes in a 2U chassis, with each node supporting 2 latest-gen Intel Xeon Scalable processors, providing more cores and computing threads than conventional 2U servers. In a standard 42U server cabinet, this density not only optimizes IT amortization of data center space but also delivers at least four times as many cores and threads as typical 2U rack-mounted hosts.
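The "four times" figure follows from simple rack arithmetic. The sketch below assumes that a typical 2U host is a single dual-socket node and that both configurations use the same processor model, so the ratio of sockets equals the ratio of cores and threads; `sockets_per_rack` is a hypothetical helper, and it ignores rack units taken by switches and PDUs.

```python
RACK_UNITS = 42  # standard server cabinet height cited in the text


def sockets_per_rack(chassis_u, nodes_per_chassis, sockets_per_node, rack_units=RACK_UNITS):
    """Processor sockets that fit in one cabinet (ignores space for switches and PDUs)."""
    chassis = rack_units // chassis_u
    return chassis * nodes_per_chassis * sockets_per_node


# H series: 4 dual-socket nodes per 2U chassis (from the text).
h_series = sockets_per_rack(2, 4, 2)
# Assumption: a typical 2U rack-mounted host is a single dual-socket node.
typical_2u = sockets_per_rack(2, 1, 2)

print(h_series, typical_2u, h_series / typical_2u)  # 168 42 4.0
```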

● G Series Servers for Heterogeneous Computing

The G series products are designed for heterogeneous computing architectures. Whether GPU, FPGA or ASIC acceleration cards are used, all G series servers have been designed to meet the power supply and thermal requirements of these cards, ensuring reliable ongoing system operation.

GIGABYTE's servers provide flexibility in selecting high performance computing systems and better cost-effectiveness, and they ensure reliability and operational efficiency once deployed. Customers can focus on innovation in their core businesses while we provide the support and products for their digital transformation.
